VC dimension

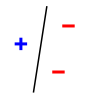

In Vapnik–Chervonenkis theory, the Vapnik–Chervonenkis (VC) dimension is a measure of the capacity (complexity, expressive power, richness, or flexibility) of a space of functions that can be learned by a statistical classification algorithm. It is defined as the cardinality of the largest set of points that the algorithm can shatter. It was originally defined by Vladimir Vapnik and Alexey Chervonenkis. In Vapnik–Chervonenkis theory, the Vapnik–Chervonenkis (VC) dimension is a measure of the capacity (complexity, expressive power, richness, or flexibility) of a space of functions that can be learned by a statistical classification algorithm. It is defined as the cardinality of the largest set of points that the algorithm can shatter. It was originally defined by Vladimir Vapnik and Alexey Chervonenkis. Informally, the capacity of a classification model is related to how complicated it can be. For example, consider the thresholding of a high-degree polynomial: if the polynomial evaluates above zero, that point is classified as positive, otherwise as negative. A high-degree polynomial can be wiggly, so it can fit a given set of training points well. But one can expect that the classifier will make errors on other points, because it is too wiggly. Such a polynomial has a high capacity. A much simpler alternative is to threshold a linear function. This function may not fit the training set well, because it has a low capacity. This notion of capacity is made rigorous below. Let H {displaystyle H} be a set family (a set of sets) and C {displaystyle C} a set. Their intersection is defined as the following set-family: We say that a set C {displaystyle C} is shattered by H {displaystyle H} if H ∩ C {displaystyle Hcap C} contains all the subsets of C {displaystyle C} , i.e.: The VC dimension of H {displaystyle H} is the largest integer D {displaystyle D} such that there exists a set C {displaystyle C} with cardinality D {displaystyle D} that is shattered by H {displaystyle H} . If arbitrarily large subsets can be shattered, the VC dimension is ∞ {displaystyle infty } . A classification model f {displaystyle f} with some parameter vector θ {displaystyle heta } is said to shatter a set of data points ( x 1 , x 2 , … , x n ) {displaystyle (x_{1},x_{2},ldots ,x_{n})} if, for all assignments of labels to those points, there exists a θ {displaystyle heta } such that the model f {displaystyle f} makes no errors when evaluating that set of data points. The VC dimension of a model f {displaystyle f} is the maximum number of points that can be arranged so that f {displaystyle f} shatters them. More formally, it is the maximum cardinal D {displaystyle D} such that some data point set of cardinality D {displaystyle D} can be shattered by f {displaystyle f} . 1. f {displaystyle f} is a constant classifier (with no parameters). Its VC-dimension is 0 since it cannot shatter even a single point. In general, the VC dimension of a finite classification model, which can return at most 2 d {displaystyle 2^{d}} different classifiers, is at most d {displaystyle d} (this is an upper bound on the VC dimension; the Sauer–Shelah lemma gives a lower bound on the dimension). 2. f {displaystyle f} is a single-parametric threshold classifier on real numbers; i.e, for a certain threshold θ {displaystyle heta } , the classifier f θ {displaystyle f_{ heta }} returns 1 if the input number is larger than θ {displaystyle heta } and 0 otherwise. The VC dimension of f {displaystyle f} is 1 because: (a) It can shatter a single point. For every point x {displaystyle x} , a classifier f θ {displaystyle f_{ heta }} labels it as 0 if θ > x {displaystyle heta >x} and labels it as 1 if θ < x {displaystyle heta <x} . (b) It cannot shatter any set of two points. For every set of two numbers, if the smaller is labeled 1, then the larger must also be labeled 1, so not all labelings are possible.